Starting a travel platform has become increasingly popular in recent years. This can be attributed to the rise of social media, which allows users to

Channel Db2 is an enterprise data platform that helps organizations manage, secure, and store massive amounts of structured and unstructured data. It is a comprehensive

If you are in the cryptocurrency space, you have probably heard of both Polygon (MATIC) and Matic Network. Both projects aim to solve the scalability

If you’re interested in investing in Tesla stock, there are a few things you should keep in mind. First, Tesla is a volatile stock, so

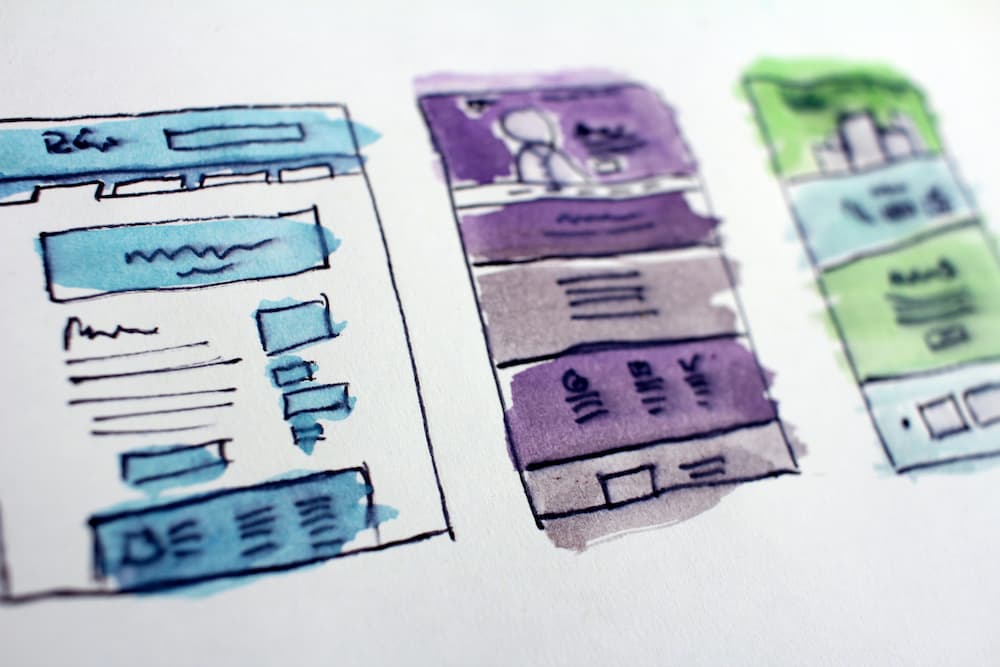

Most of you have probably visited a casino website before, and some of them must have been pretty bad. The reason for this is